Parallelism in .NET – Part 5, Partitioning of Work

Posted by Reed on Reed Copsey

Published on Tue, 26 Jan 2010 17:34:56 +0000

When parallelizing any routine, we start by decomposing the problem. Once the problem is understood, we need to break our work into separate tasks, so each task can be run on a different processing element. This process is called partitioning.

Partitioning our tasks is a challenging feat. There are opposing forces at work here: too many partitions add overhead, while too few partitions leave processors idle. Our goal is to strike the right balance between these two extremes. Luckily, the Task Parallel Library automatically handles much of this process. However, there are situations where the default partitioning may not be appropriate, and knowledge of our routines may allow us to guide the framework toward making better decisions.

First off, I’d like to say that this is a more advanced topic. It is perfectly acceptable to use the parallel constructs in the framework without considering the partitioning taking place. The default behavior in the Task Parallel Library is very well-behaved, even for unusual work loads, and should rarely be adjusted. I have found few situations where the default partitioning behavior in the TPL is not as good as, or better than, my own hand-written partitioning routines, and recommend using the defaults unless there is a strong, measured, and profiled reason to avoid using them. However, understanding partitioning, and how the TPL partitions your data, helps in understanding the proper usage of the TPL.

I indirectly mentioned partitioning while discussing aggregation. Typically, our systems will have a limited number of Processing Elements (PE), which is the terminology used for hardware capable of processing a stream of instructions. For example, in a standard Intel i7 system, there are four processor cores, each of which has two potential hardware threads due to Hyperthreading. This gives us a total of 8 PEs – theoretically, we can have up to eight operations occurring concurrently within our system.

In order to fully exploit this power, we need to partition our work into Tasks. A task is a simple set of instructions that can be run on a PE. Ideally, we want to have at least one task per PE in the system, since fewer tasks means that some of our processing power will be sitting idle. A naive implementation would be to just take our data, and partition it with one element in our collection being treated as one task. When we loop through our collection in parallel, using this approach, we’d just process one item at a time, then reuse that thread to process the next, etc. There’s a flaw in this approach, however. It will tend to be slower than necessary, often slower than processing the data serially.

The problem is that there is overhead associated with each task. When we take a simple foreach loop body and implement it using the TPL, we add overhead. First, we change the body from a simple statement to a delegate, which must be invoked. In order to invoke the delegate on a separate thread, the delegate gets added to the ThreadPool’s current work queue, and the ThreadPool must pull this off the queue, assign it to a free thread, then execute it. If our collection had one million elements, the overhead of trying to spawn one million tasks would destroy our performance.

The answer, here, is to partition our collection into groups, and have each group of elements treated as a single task. By adding a partitioning step, we can break our total work into small enough tasks to keep our processors busy, but large enough tasks to avoid overburdening the ThreadPool. There are two clear, opposing goals here:

Always try to keep each processor working, but also try to keep the individual partitions as large as possible.
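To make the idea of grouping elements into tasks concrete, here is a minimal sketch using the framework's range partitioner, `Partitioner.Create(fromInclusive, toExclusive, rangeSize)`. The class name `ChunkedSum`, the method `SumOfSquares`, and the chosen range size are illustrative assumptions, not something from the original post.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

static class ChunkedSum
{
    // Sums the squares of the data, treating each index range as one unit of work.
    // Assumes data is non-empty and rangeSize > 0 (Partitioner.Create rejects empty ranges).
    public static double SumOfSquares(double[] data, int rangeSize)
    {
        double total = 0;
        object sync = new object();

        // Each Tuple<int, int> is a half-open index range [Item1, Item2),
        // so the delegate runs once per range rather than once per element.
        Parallel.ForEach(Partitioner.Create(0, data.Length, rangeSize), range =>
        {
            double local = 0;
            for (int i = range.Item1; i < range.Item2; i++)
                local += data[i] * data[i];

            // One lock per range instead of one per element.
            lock (sync) { total += local; }
        });

        return total;
    }

    static void Main()
    {
        var data = new double[1000000];
        for (int i = 0; i < data.Length; i++) data[i] = 1.0;

        Console.WriteLine(SumOfSquares(data, 10000));
    }
}
```

With one million elements and a range size of 10,000, the delegate is invoked about 100 times instead of one million times, while still leaving enough separate ranges to keep several processors busy.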

When using Parallel.For, the partitioning is always handled automatically. At first, partitioning here seems simple. A naive implementation would merely split the total element count up by the number of PEs in the system, and assign a chunk of data to each processor. Many hand-written partitioning schemes work in exactly this manner. This perfectly balanced, static partitioning scheme works very well if the amount of work is constant for each element. However, this is rarely the case. Often, the length of time required to process an element grows as we progress through the collection, especially if we’re doing numerical computations. In this case, the first PEs will finish early, and sit idle waiting on the last chunks to finish. Sometimes, work can decrease as we progress, since previous computations may be used to speed up later computations. In this situation, the first chunks will be working far longer than the last chunks. In order to balance the workload, many implementations create many small chunks, and reuse threads. This adds overhead, but does provide better load balancing, which in turn improves performance.
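The naive static scheme described above might look something like the following sketch. `StaticPartitioning.Split` is a hypothetical helper written for illustration, not a framework API. It divides the index range evenly by a given partition count, and `Main` then runs one task per range.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

static class StaticPartitioning
{
    // Splits [0, count) into at most `parts` contiguous half-open ranges
    // whose sizes differ by no more than one element.
    public static List<Tuple<int, int>> Split(int count, int parts)
    {
        var ranges = new List<Tuple<int, int>>();
        int baseSize = count / parts;
        int remainder = count % parts;
        int start = 0;

        for (int p = 0; p < parts; p++)
        {
            // The first `remainder` ranges take one extra element.
            int size = baseSize + (p < remainder ? 1 : 0);
            if (size == 0) break; // fewer elements than partitions

            ranges.Add(Tuple.Create(start, start + size));
            start += size;
        }

        return ranges;
    }

    static void Main()
    {
        var data = new int[10];
        var tasks = new List<Task>();

        // One task per processing element, each with a fixed block of indices.
        foreach (var r in Split(data.Length, Environment.ProcessorCount))
        {
            var range = r; // local copy so each closure captures its own range
            tasks.Add(Task.Factory.StartNew(() =>
            {
                for (int i = range.Item1; i < range.Item2; i++)
                    data[i] = i * i;
            }));
        }

        Task.WaitAll(tasks.ToArray());
        Console.WriteLine(string.Join(",", data));
    }
}
```

Because every range is fixed up front, a task that happens to receive the expensive elements keeps working long after the others have finished, which is exactly the imbalance discussed above.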

The Task Parallel Library handles this more elaborately. Chunks are determined at runtime, and start small. They grow slowly over time, getting larger and larger. This tends to lead to near-optimal load balancing, even in odd cases such as increasing or decreasing workloads.
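As a rough illustration of chunks that start small and grow, here is a toy dispenser that workers pull index ranges from. This is not the TPL's actual algorithm. The doubling rule, the `maxChunk` cap, and the `GrowingChunkDispenser` and `GrowingChunkDemo` names are all assumptions made for this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical helper: hands out index ranges that double in size up to a cap.
sealed class GrowingChunkDispenser
{
    private readonly object _sync = new object();
    private readonly int _count;
    private readonly int _maxChunk;
    private int _next;      // first index not yet handed out
    private int _chunk = 1; // size of the next chunk

    public GrowingChunkDispenser(int count, int maxChunk)
    {
        _count = count;
        _maxChunk = maxChunk;
    }

    // Claims the next half-open range [start, end); returns false when nothing is left.
    public bool TryTake(out int start, out int end)
    {
        lock (_sync)
        {
            if (_next >= _count) { start = end = _count; return false; }

            start = _next;
            end = Math.Min(_count, start + _chunk);
            _next = end;
            _chunk = Math.Min(_maxChunk, _chunk * 2); // grow for the next caller
            return true;
        }
    }
}

static class GrowingChunkDemo
{
    // Lists the chunk sizes in the order they would be handed out.
    public static List<int> ChunkSizes(int count, int maxChunk)
    {
        var dispenser = new GrowingChunkDispenser(count, maxChunk);
        var sizes = new List<int>();
        int start, end;
        while (dispenser.TryTake(out start, out end)) sizes.Add(end - start);
        return sizes;
    }

    static void Main()
    {
        var data = new int[1000];
        var dispenser = new GrowingChunkDispenser(data.Length, 64);

        // Each worker keeps claiming chunks until the range is exhausted.
        Action worker = () =>
        {
            int start, end;
            while (dispenser.TryTake(out start, out end))
                for (int i = start; i < end; i++) data[i] = i * 2;
        };

        Parallel.Invoke(worker, worker, worker, worker);
        Console.WriteLine(string.Join(" ", ChunkSizes(20, 8)));
    }
}
```

A worker that finishes a cheap chunk simply comes back for another, so no processor is stuck with a fixed share of the work. The small early chunks help balance the load, and the larger later chunks reduce how often workers contend for the lock.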

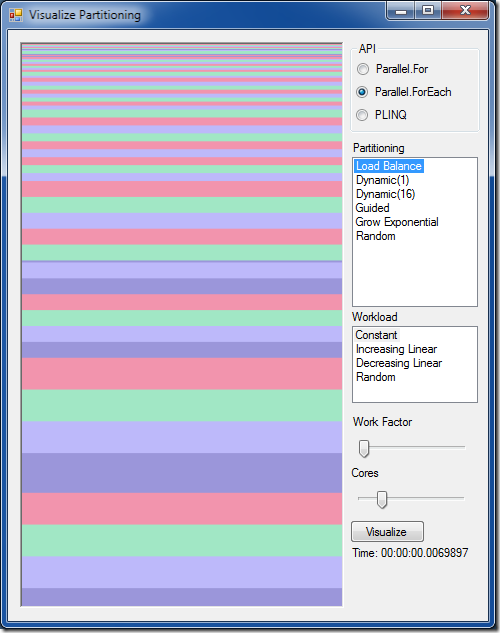

Parallel.ForEach is a bit more complicated, however. When working with a generic IEnumerable<T>, the number of items required for processing is not known in advance, and must be discovered at runtime. In addition, since we don’t have direct access to each element, the scheduler must enumerate the collection to process it. Since IEnumerable<T> is not thread safe, it must lock on elements as it enumerates, create temporary collections for each chunk to process, and schedule this out. By default, it uses a partitioning method similar to the one described above. We can see this directly by looking at the Visual Partitioning sample shipped by the Task Parallel Library team, and available as part of the Samples for Parallel Programming. When we run the sample, with four cores and the default, Load Balancing partitioning scheme, we see this:

The colored bands represent each processing core. You can see that, when we start (at the top), we begin with very small bands of color. As the routine progresses through the Parallel.ForEach, the chunks get larger and larger (seen by larger and larger stripes).

Most of the time, this is fantastic behavior, and most likely will outperform any custom-written partitioning. However, if your routine is not scaling well, it may be due to a failure in the default partitioning to handle your specific case. With prior knowledge about your work, it may be possible to partition data more meaningfully than the default Partitioner.

There is the option to use an overload of Parallel.ForEach which takes a Partitioner<T> instance. The Partitioner<T> class is an abstract class which allows for both static and dynamic partitioning. Every Partitioner<T> subclass must override GetPartitions(int), which returns a list of IEnumerator<T> instances, one per requested partition. By overriding Partitioner<T>.SupportsDynamicPartitions, you can specify whether a dynamic approach is also available. When it is, GetDynamicPartitions() is used, which returns a single IEnumerable<T>; each call to GetEnumerator() on that object hands out a new partition. Note that Parallel.ForEach itself requires a partitioner that supports dynamic partitions, and throws an InvalidOperationException otherwise. The Parallel class then uses these partitions to split work up amongst processors. If you do decide to implement your own Partitioner<T>, keep in mind the goals and tradeoffs of different partitioning strategies, and design appropriately.
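As a minimal sketch of what such a subclass could look like, the following partitioner hands out fixed-size chunks of an array on demand. `FixedChunkPartitioner<T>`, its nested `SharedCursor`, and `CustomPartitionerDemo` are hypothetical names invented for this example. Only `Partitioner<T>`, its three overridden members, and `Parallel.ForEach` come from the framework.

```csharp
using System;
using System.Collections;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical example type: hands out fixed-size chunks of an array on demand.
sealed class FixedChunkPartitioner<T> : Partitioner<T>
{
    private readonly T[] _source;
    private readonly int _chunkSize;

    public FixedChunkPartitioner(T[] source, int chunkSize)
    {
        if (source == null) throw new ArgumentNullException("source");
        if (chunkSize < 1) throw new ArgumentOutOfRangeException("chunkSize");
        _source = source;
        _chunkSize = chunkSize;
    }

    // Tells callers that GetDynamicPartitions() may be used.
    public override bool SupportsDynamicPartitions { get { return true; } }

    // Static form: a fixed number of enumerators that all draw from one shared cursor.
    public override IList<IEnumerator<T>> GetPartitions(int partitionCount)
    {
        if (partitionCount < 1) throw new ArgumentOutOfRangeException("partitionCount");
        IEnumerable<T> shared = GetDynamicPartitions();
        var partitions = new List<IEnumerator<T>>(partitionCount);
        for (int i = 0; i < partitionCount; i++)
            partitions.Add(shared.GetEnumerator());
        return partitions;
    }

    // Dynamic form: every GetEnumerator() call on the result is a new partition.
    public override IEnumerable<T> GetDynamicPartitions()
    {
        return new SharedCursor(_source, _chunkSize);
    }

    private sealed class SharedCursor : IEnumerable<T>
    {
        private readonly T[] _source;
        private readonly int _chunkSize;
        private int _next; // index of the first unclaimed element

        public SharedCursor(T[] source, int chunkSize)
        {
            _source = source;
            _chunkSize = chunkSize;
        }

        public IEnumerator<T> GetEnumerator()
        {
            while (true)
            {
                // Atomically claim the next chunk of indices for this enumerator.
                // (Sketch only: assumes the index arithmetic stays within int range.)
                int end = Interlocked.Add(ref _next, _chunkSize);
                int start = end - _chunkSize;
                if (start >= _source.Length) yield break;

                int stop = Math.Min(end, _source.Length);
                for (int i = start; i < stop; i++)
                    yield return _source[i];
            }
        }

        IEnumerator IEnumerable.GetEnumerator() { return GetEnumerator(); }
    }
}

static class CustomPartitionerDemo
{
    public static long Sum(int[] data, int chunkSize)
    {
        long total = 0;
        Parallel.ForEach(new FixedChunkPartitioner<int>(data, chunkSize),
            item => { Interlocked.Add(ref total, item); });
        return total;
    }

    static void Main()
    {
        Console.WriteLine(Sum(Enumerable.Range(1, 1000).ToArray(), 16));
    }
}
```

The chunk size is the tuning knob here: larger chunks mean fewer trips to the shared cursor, while smaller chunks give the loop more opportunities to rebalance when some elements are more expensive than others.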

The Samples for Parallel Programming project includes a ChunkPartitioner class in the ParallelExtensionsExtras project. This provides example code for implementing your own, custom allocation strategies, including a static allocator of a given chunk size. Although implementing your own Partitioner<T> is possible, as I mentioned above, this is rarely required or useful in practice. The default behavior of the TPL is very good, often better than any hand-written partitioning strategy.

© Reed Copsey or respective owner